Wget is a free, open-source command-line utility for downloading files and web pages from the internet. It retrieves data over HTTP, HTTPS, and FTP and saves it to a file, or writes it to your terminal if you ask it to. Because wget is non-interactive, you get the most out of it in scripts and in scheduled downloads, for example from cron.

Web browsers such as Firefox or Chromium also download files, but by default they render the content in a graphical window and require a user to interact with them. Many Linux users also use the curl command to transfer data from a network server.

This article shows how to use the wget command to download web pages and files from the internet.

Installing wget on Linux

To install wget on Ubuntu/Debian based Linux systems:

$ sudo apt-get install wget

To install Wget on Red Hat/CentOS:

$ sudo yum install wget

To install wget on Fedora:

$ sudo dnf install wget

Downloading a file with the wget command

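You can download a file with wget by passing it the file's URL. If the URL points to a directory, such as a site's root, wget saves the page the server returns as index.html. By default, wget saves the download in your current working directory under the file name taken from the URL; if a file with that name already exists, it adds a numeric suffix instead of overwriting it (index.html.1 in the output below). wget prints its progress messages to standard error, so to page or trim them with less or tail, redirect standard error first, for example wget http://example.com 2>&1 | tail -n 6.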

[#####@fedora ~]$ wget http://example.com
--2021-11-09 12:06:02-- http://example.com/
Resolving example.com (example.com)... 93.184.216.34, 2606:2800:220:1:248:1893:25c8:1946
Connecting to example.com (example.com)|93.184.216.34|:80... connected.
HTTP request sent, awaiting response... 200 OK
Length: 1256 (1.2K) [text/html]
Saving to: ‘index.html.1’

index.html.1        100%[======================>]   1.23K  --.-KB/s    in 0s

2021-11-09 12:06:03 (49.7 MB/s) - ‘index.html.1’ saved [1256/1256]

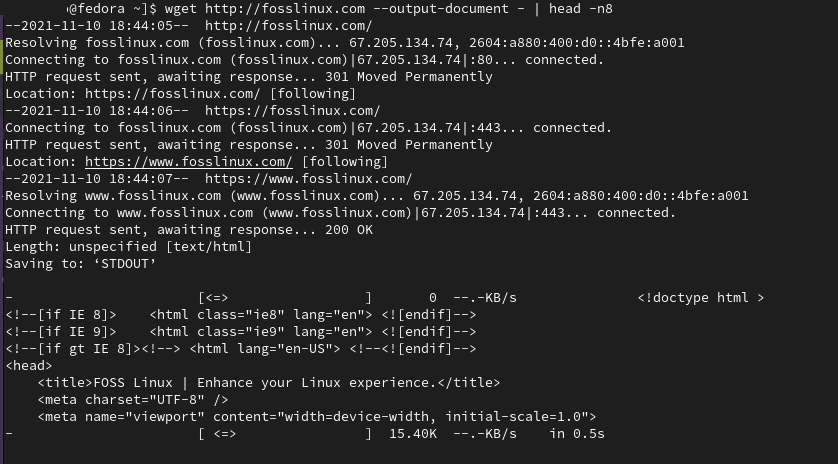

Sending downloaded data to standard output

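You can pass a dash (-) as the file name to the --output-document option to send the downloaded data to standard output instead of a file. In the example below, the page content is piped to head, while wget's own progress messages, which go to standard error, still appear in the terminal.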

[#######@fedora ~]$ wget http://example.com --output-document - | head -n8
--2021-11-09 12:17:11-- http://example.com/
Resolving example.com (example.com)... 93.184.216.34, 2606:2800:220:1:248:1893:25c8:1946
Connecting to example.com (example.com)|93.184.216.34|:80... connected.
HTTP request sent, awaiting response... 200 OK
Length: 1256 (1.2K) [text/html]
Saving to: ‘STDOUT’
<!doctype html>
     0%[                    ]       0  --.-KB/s
<html>
<head>
    <title>Example Domain</title>
    <meta charset="utf-8" />
    <meta http-equiv="Content-type" content="text/html; charset=utf-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1" />
-                   100%[======================>]   1.23K  --.-KB/s    in 0s
2021-11-09 12:17:12 (63.5 MB/s) - written to stdout [1256/1256]

Saving downloads with a different file name

You can use the --output-document option, or its short form -O, to save a download under a different file name.

$ wget http://fosslinux.com --output-document foo.html
$ wget http://fosslinux.com -O foofoofoo.html

Downloading a sequence of files

Wget can download a series of files if you know their location and file name pattern. You can use Bash brace expansion to specify a range of integers that stands for the sequence of file names from start to end; the shell expands the pattern into separate URLs before wget runs.

$ wget http://fosslinux.com/filename_{1..7}.webp
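Because the shell, not wget, performs the brace expansion, you can preview the generated URLs before downloading anything. The sketch below reuses the placeholder host and file names from the command above; the zero-padded form {01..07} assumes Bash 4 or later.

```shell
#!/usr/bin/env bash
# Expand the same pattern wget would receive, without downloading anything.
# fosslinux.com/filename_N.webp are the placeholder names from the example above.
urls=(http://fosslinux.com/filename_{1..7}.webp)

# Print one URL per line so the list is easy to check
printf '%s\n' "${urls[@]}"
echo "URL count: ${#urls[@]}"

# Bash 4+ keeps leading zeros when both ends of the range are zero-padded
padded=(http://fosslinux.com/filename_{01..07}.webp)
printf '%s\n' "${padded[0]}"

# Once the list looks right, hand the same URLs to wget:
# wget "${urls[@]}"
```

Storing the expansion in an array keeps each URL as a separate argument, so the commented wget line receives exactly the list you previewed.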

Downloading multiple pages and files

You can download multiple files with a single wget command by listing all of their URLs.

$ wget URL1 URL2 URL3

Resuming a partial download

If you’re downloading large files, the download might be interrupted. With the --continue (-c) option, wget checks how much of the file is already on disk and resumes from that point instead of starting over. This is handy for large files such as a Fedora 35 ISO image. Note that resuming only works with FTP servers and with HTTP servers that support the Range header.

$ wget --continue https://fosslinux.com/foss-linux-distro.iso

Managing recursive downloads with the wget command

Use the --recursive (-r) option to turn on recursive downloads. In recursive mode, wget crawls the given site URL and follows its links up to the default or a specified maximum depth level.

$ wget -r fosslinux.com

By default, the maximum recursive download depth is 5. However, wget provides the -l option to specify your maximum recursion depth.

$ wget -r -l 11 fosslinux.com

You can specify infinite recursion with ‘-l 0’, which is equivalent to ‘-l inf’. wget then keeps following links until it has fetched every page reachable from the starting URL; by default it stays on the same host.

Converting links for local viewing

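The --convert-links (-k) option is another essential wget option: after the download finishes, wget rewrites the links in the downloaded pages so that they point to the local copies, which makes them suitable for offline viewing.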

$ wget -r -l 3 --convert-links fosslinux.com

Downloading Specific File Types

You can use the -A (--accept) option to keep only specific file types during a recursive download. For example, the following command downloads the PDF files from a website.

$ wget -r -A '*.pdf' fosslinux.com

Note that the recursive maximum retrieval depth level is limited to 5 by default.

Downloading Files From FTP Server

The wget command can come in handy when you need to download files from an FTP Server.

$ wget --ftp-user=username --ftp-password=password ftp://192.168.1.13/foofoo.pdf

In the above example, wget downloads ‘foofoo.pdf’ from the FTP server at 192.168.1.13.

You can also use the -r recursive option with the FTP protocol to download FTP files recursively.

$ wget -r --ftp-user=username --ftp-password=pass ftp://192.168.1.13/

Setting max download size with wget command

You can cap the total amount of data fetched during a recursive retrieval with the --quota (-Q) option. You can specify the size in bytes (default), kilobytes (k suffix), or megabytes (m suffix). Once the quota is exceeded, wget stops starting new downloads; because the quota is only checked after each file finishes, the file in progress is never cut off.

$ wget -r --quota=1024m fosslinux.com

Note that download quotas do not affect downloading a single file.

Setting download speed limit with wget command

You can also use the --limit-rate option to cap the download speed. For example, the following command downloads the ‘foofoo.tar.gz’ file and limits the download speed to 256KB/s.

$ wget --limit-rate=256k URL/foofoo.tar.gz

Note that you can express the desired download rate in bytes (no suffix), kilobytes (using k suffix), or megabytes (using m suffix).
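To see what a suffixed value works out to, the hypothetical helper to_bytes below converts a --quota or --limit-rate value into bytes. It assumes wget's binary multipliers (k = 1024, m = 1024 × 1024) and accepts only whole numbers, so treat it as a sketch rather than a reimplementation of wget's own parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a wget-style size such as 256k or 1024m to bytes.
# Assumes k = 1024 and m = 1024*1024; whole numbers only (Bash 4+ for ${1,,}).
to_bytes() {
  local value=${1,,}   # lower-case so 256K and 256k behave the same
  case $value in
    *k) echo $(( ${value%k} * 1024 )) ;;
    *m) echo $(( ${value%m} * 1024 * 1024 )) ;;
    *)  echo $(( value )) ;;   # no suffix: already bytes
  esac
}

rate=$(to_bytes 256k)     # --limit-rate=256k, in bytes per second
quota=$(to_bytes 1024m)   # --quota=1024m, in bytes

echo "rate:  $rate bytes/s"
echo "quota: $quota bytes"
# Whole seconds needed to transfer the full quota at that rate
echo "time:  $(( quota / rate )) s"
```

With these values, 256k is 262,144 bytes per second, and transferring 1024m at that rate takes 4096 seconds, a little over 68 minutes.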

Mirroring a website with the wget command

You can download, or mirror, an entire site, including its directory structure, with the --mirror (-m) option. Mirroring a site is similar to a recursive download with no maximum depth level: --mirror is shorthand for --recursive --level inf --timestamping --no-remove-listing, so you can also spell those options out.

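You can also archive a site by adding the --no-cookies, --page-requisites, and --convert-links options. --page-requisites also fetches the images, stylesheets, and other files each page needs to display, and --convert-links points the links at those local copies, so the archived copy is self-contained and looks like the original site.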

$ wget --mirror --convert-links fosslinux.com
$ wget --recursive --level inf --timestamping --no-remove-listing fosslinux.com

Note that archiving a site can download a lot of data, especially if the site is large or has been online for many years.

Reading URLs from a text file

The wget command can read URLs from a text file with the -i (--input-file) option. The file can contain many URLs, one per line.

$ wget -i URLS.txt
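A minimal way to prepare the input file is a here-document. The URLs below are placeholders, and the duplicate-removal step is an optional extra (not something wget requires) so that each file is requested only once.

```shell
#!/usr/bin/env bash
# Write one URL per line; these addresses are placeholders.
cat > URLS.txt <<'EOF'
https://fosslinux.com/file1.pdf
https://fosslinux.com/file2.pdf
https://fosslinux.com/file1.pdf
EOF

# Optional: drop duplicate lines so each URL is fetched only once.
# sort -o may safely name the input file as its output.
sort -u -o URLS.txt URLS.txt

# Show how many distinct URLs remain
wc -l < URLS.txt

# Then download every URL in the list:
# wget -i URLS.txt
```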

Expanding a shortened URL

You can use the --max-redirect option to see where a shortened URL leads before you visit it. Shortened URLs are common in print media and on social networks with character limits, but they can also be suspicious because they hide their destination.

Note: To follow every hop without loading the full resource, curl users often combine its --head and --location options. The closest wget equivalent is --spider with --server-response (-S), which prints the HTTP headers of each response, including every Location header, without saving the final page.

[######@fedora ~]$ wget --max-redirect 0 https://t.co/GVr5v9554B?amp=1
--2021-11-10 16:22:08-- https://t.co/GVr5v9554B?amp=1
Resolving t.co (t.co)... 104.244.42.133, 104.244.42.69, 104.244.42.5, ...
Connecting to t.co (t.co)|104.244.42.133|:443... connected.
HTTP request sent, awaiting response... 301 Moved Permanently
Location: https://bit.ly/ [following]
0 redirections exceeded.

Note: The destination appears on the output line that starts with Location.
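A small wrapper can print just that Location value. The function names expand_url and extract_location are hypothetical, and two details matter: wget writes these status lines to standard error, so 2>&1 is needed before the pipe, and with --max-redirect 0 wget stops after the first hop, so a chain of shorteners (t.co to bit.ly in the transcript above) reveals only the next step.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the URL from each "Location: <url> [following]" line.
extract_location() {
  awk '/^Location: / { print $2 }'
}

# Hypothetical wrapper: ask wget for the first redirect only.
# wget logs to stderr, so merge it into stdout before filtering;
# -O /dev/null discards the body if the URL turns out not to redirect.
expand_url() {
  wget --max-redirect 0 -O /dev/null "$1" 2>&1 | extract_location
}

# Offline check against the transcript shown above
printf '%s\n' \
  'HTTP request sent, awaiting response... 301 Moved Permanently' \
  'Location: https://bit.ly/ [following]' \
  '0 redirections exceeded.' | extract_location   # prints https://bit.ly/
```

For a live URL, quote it so the shell does not treat the ? as a glob character: expand_url 'https://t.co/GVr5v9554B?amp=1'.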

Viewing request headers with the wget command

HTTP headers are metadata that clients and servers exchange with every request and response. For example, every time you visit a website, your browser sends HTTP request headers. You can use the --debug option to reveal the request headers wget sends to the server for each request.

[#####@fedora ~]$ wget --debug fosslinux.com
DEBUG output created by Wget 1.21.1 on linux-gnu.
---request begin---
GET / HTTP/1.1
User-Agent: Wget/1.21.1
Accept: */*
Accept-Encoding: identity
Host: fosslinux.com
Connection: Keep-Alive
---request end---
HTTP request sent, awaiting response...
---response begin---

Viewing response headers with wget command

The --debug output also shows the response headers the server returns. If you only need those, the -S (--server-response) option prints them without the rest of the debug information.

[#####@fedora ~]$ wget --debug fosslinux.com
…..
---request end---
HTTP request sent, awaiting response...
---response begin---
HTTP/1.1 200 OK
Server: nginx
Date: Wed, 10 Nov 2021 13:36:29 GMT
Content-Type: text/html; charset=UTF-8
Transfer-Encoding: chunked
Connection: keep-alive
Vary: Accept-Encoding
X-Cache: HIT
---response end---
200 OK
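Debug output mixes headers with many other messages, so it can help to cut out a single block. The helper names below are hypothetical; the sketch assumes the begin and end markers each sit on their own line, as in the output above, and that your wget build was compiled with debug support, as the Fedora build shown here was.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the lines between ---NAME begin--- and ---NAME end---,
# where NAME is "request" or "response".
extract_block() {
  awk -v b="---$1 begin---" -v e="---$1 end---" '
    $0 == b { inside = 1; next }   # start after the begin marker
    $0 == e { inside = 0 }         # stop at the end marker
    inside                         # print lines in between
  '
}

# Hypothetical wrapper: fetch URL, discard the body, show one header block.
show_headers() {
  wget --debug -O /dev/null "$1" 2>&1 | extract_block "${2:-response}"
}

# Offline check against the request block shown earlier;
# prints the GET line and the User-Agent line
printf '%s\n' \
  'DEBUG output created by Wget 1.21.1 on linux-gnu.' \
  '---request begin---' \
  'GET / HTTP/1.1' \
  'User-Agent: Wget/1.21.1' \
  '---request end---' \
  'HTTP request sent, awaiting response...' | extract_block request
```

For a live site, show_headers https://fosslinux.com request prints the request headers, and omitting the second argument prints the response headers.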

Responding to a 301 response code

HTTP response status codes are essential to web administrators. A 301 status code means that a URL has moved permanently to a different location. By default, wget follows redirects, up to 20 of them. The --max-redirect option sets how many redirects wget may follow; setting it to 0 tells wget to follow none and simply report the redirect.

[######@fedora ~]$ wget --max-redirect 0 https://fosslinux.com
--2021-11-10 16:55:54-- https://fosslinux.com/
Resolving fosslinux.com (fosslinux.com)... 67.205.134.74, 2604:a880:400:d0::4bfe:a001
Connecting to fosslinux.com (fosslinux.com)|67.205.134.74|:443... connected.
HTTP request sent, awaiting response... 301 Moved Permanently
Location: https://www.fosslinux.com/ [following]
0 redirections exceeded.

Saving wget verbose output to a log file

By default, wget prints verbose progress messages to the terminal. You can use the -o option to write all of these messages to a log file instead; the -a option does the same but appends to the file rather than overwriting it.

$ wget -o foofoo_log.txt fosslinux.com

The above wget command will save the verbose output to the ‘foofoo_log.txt’ file.

Running wget command as a web spider

You can make wget behave like a web spider with the --spider option. In this mode, it checks that pages exist instead of saving them, and it reports any broken links it finds.

$ wget -r --spider fosslinux.com

Running wget command in the background

You can use the -b (--background) option to run wget in the background. This is useful for large downloads that take a long time to complete.

$ wget -b fosslinux.com/latest.tar.gz

By default, the output of the wget process is redirected to ‘wget-log’. However, you can specify a different log file with the -o option.

To monitor the wget process, use the tail command.

$ tail -f wget-log
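If you would rather check on a background download from a script than watch tail -f, you can poll the log for wget's completion line. The functions below are a hypothetical sketch: they treat a line containing " saved [" (like the ‘index.html.1’ saved [1256/1256] line shown earlier) as success, and they do not recognize failure messages, so a failed download simply times out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed once LOG contains wget's completion line,
# e.g. "... - ‘index.html.1’ saved [1256/1256]".
download_saved() {
  grep -q ' saved \[' "${1:-wget-log}" 2>/dev/null
}

# Hypothetical helper: poll LOG up to TRIES times, INTERVAL seconds apart.
# Returns 0 when the completion line appears, 1 if it never does.
wait_for_download() {
  local log=${1:-wget-log} tries=${2:-60} interval=${3:-10} i
  for (( i = 1; i <= tries; i++ )); do
    download_saved "$log" && return 0
    sleep "$interval"
  done
  return 1
}

# Example, after starting: wget -b fosslinux.com/latest.tar.gz
# wait_for_download wget-log 60 10 && echo "download finished"
```

When a single wget -b run fetches several files, the log gets one saved line per file, so this check only tells you that at least one of them has finished.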

Running wget in debug mode

When you run wget in debug mode, the output includes the request headers wget sends and the response headers the server returns. These headers help system administrators and web developers troubleshoot a site.

$ wget --debug fosslinux.com

Changing the User-Agent with the wget command

You can change the default User-Agent with the --user-agent (-U) option. For example, the following command retrieves fosslinux.com with ‘Mozilla/4.0’ as the User-Agent.

$ wget --user-agent='Mozilla/4.0' fosslinux.com

You can find more wget tips and tricks in the official wget manual (man wget).

Wrapping up

The Linux wget command provides an efficient way to download data from the internet without a browser. Like the versatile curl command, wget handles demanding download scenarios such as large files, unattended (non-interactive) downloads, and batches of files.