If you have been a Linux user or enthusiast for a considerable amount of time, then the term swap or swap memory should not be news to you. But, unfortunately, many Linux users tend to confuse the concept of swap memory with swappiness. The most common misconception is that a swappiness value indicates the maximum usable RAM before the actual swapping process begins.

To dispel this widespread misconception, we have to break down the definitions of both swapping and swappiness.

Redeeming swappiness from common misconceptions

The term swappiness is derived from swapping. For swapping to take place, RAM (Random Access Memory) has to hold some data. When part of this data is written out to a dedicated hard disk location, such as a swap file or a swap partition, the RAM it occupied is freed for other uses. Moving data out of RAM to disk in order to free that RAM is what swapping means.

Your Linux OS contains a swappiness value configuration setting. The existence of this value continues to brew many misconceptions about its intended system functionality. The most common one is its association with the RAM usage threshold. From the definition of swapping, swappiness is misunderstood as the maximum RAM storage value that triggers the onset of swapping.

RAM split zones

To find clarity from the swappiness misconception discussed earlier, we have to start from where this misconception began. First, we need to look at the Random Access Memory (RAM). Our interpretation of the RAM is very different from the Linux OS perception. We see the RAM as a single homogeneous memory entity while Linux interprets it as split memory zones or regions.

The availability of these zones on your machine depends on the architecture of the machine in use. For example, it could be a 32-bit architecture machine or a 64-bit architecture machine. To better understand this split zones concept, consider the following x86 architecture computer zones breakdown and descriptions.

- Direct Memory Access (DMA): This zone covers the lowest 16 MB of memory. Its name comes from the way it is used. Some early hardware devices could only perform direct memory access to addresses in this low region of physical memory.

- Direct Memory Access 32 (DMA32): Despite its name, DMA32 is a memory zone that exists only on 64-bit Linux. It covers the memory below 4 GB, the range that devices limited to 32-bit addresses can reach. A 32-bit Linux machine has no DMA32 zone, and it can only address more than 4 GB of RAM when the user opts for a PAE (Physical Address Extension) kernel.

- Normal: On a 64-bit architecture, normal memory is, roughly speaking, all of the RAM above 4 GB. On a 32-bit architecture, normal memory lies between 16 MB and 896 MB.

- HighMem: This memory zone only exists on 32-bit Linux. It is the RAM above 896 MB on small machines, and on large machines with more capable hardware it also includes the RAM above 4 GB.

RAM and PAGESIZE values

RAM is allocated in pages of a fixed size. The kernel determines this size at boot time, when it detects your computer's architecture. On a typical x86 Linux computer, the page size is 4 KB (4096 bytes).

To determine the page size of your Linux machine, you can make use of the “getconf” command as demonstrated below:

$ getconf PAGESIZE

Running the above command on your terminal should give you an output like:

4096
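Because memory is handed out in whole pages, the page size lets you convert a page count into a more familiar unit. The following is a minimal sketch, assuming a glibc-based system where "getconf _PHYS_PAGES" reports the number of physical pages. The helper name pages_to_mib is made up for illustration.

```shell
#!/bin/sh
# pages_to_mib PAGES PAGESIZE
# Hypothetical helper: convert a page count into mebibytes (integer math).
pages_to_mib() {
    echo $(( $1 * $2 / 1024 / 1024 ))
}

# Example: total RAM as the kernel sees it, shown only when getconf
# returns plain numbers for both values (an assumption about your system).
pages=$(getconf _PHYS_PAGES 2>/dev/null)
size=$(getconf PAGESIZE 2>/dev/null)
case "$pages" in ''|*[!0-9]*) pages=0 ;; esac
case "$size" in ''|*[!0-9]*) size=0 ;; esac
if [ "$pages" -gt 0 ] && [ "$size" -gt 0 ]; then
    echo "Total RAM: $(pages_to_mib "$pages" "$size") MiB"
fi
```

For instance, 262144 pages of 4096 bytes each work out to 1024 MiB.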

Zones and Nodes attachments

The memory zones discussed above are attached to nodes, and each node is associated with a CPU (Central Processing Unit). The kernel refers to this node-to-CPU association when it allocates memory for a process: it tries to satisfy the request from the node attached to the CPU on which the process is scheduled to run.

This node-to-CPU arrangement makes it possible to install mixed memory types in one machine. Specialist multi-CPU computers are the primary target of such installations, and they only work when the Non-Uniform Memory Access (NUMA) architecture is in use.

These are high-end requirements, so an average Linux computer has just one node. The OS calls it node zero, and it owns all the available memory zones. You can inspect the nodes and zones on your own machine through the “/proc/buddyinfo” file, using the following command.

$ less /proc/buddyinfo

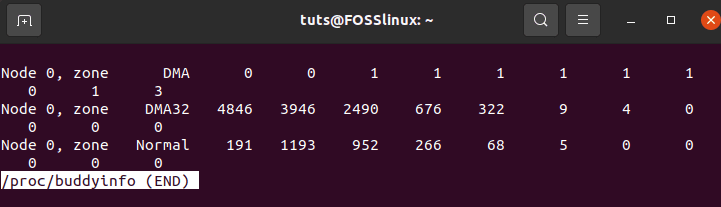

Your terminal output should be similar to the following screenshot.

accessing the buddyinfo file for zones and nodes data

As you can see, from my end, I am dealing with three zones: DMA, DMA32, and Normal zones.

The interpretation of these zones’ data is straightforward. For example, if we go with the DMA32 zone, we can unravel some critical information. Moving from left to right, we can reveal the following:

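4846: There are 4846 free memory chunks of size 2^0 * PAGESIZE (one page each)

3946: There are 3946 free memory chunks of size 2^1 * PAGESIZE (two pages each)

2490: There are 2490 free memory chunks of size 2^2 * PAGESIZE (four pages each)

…

0: There are 0 free memory chunks of the largest size, listed in the last column

In general, the nth column (counting from zero) holds the number of free chunks that are 2^n pages long. On a file with 11 columns, the last column therefore counts chunks of 2^10 * PAGESIZE.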

The above info clarifies how nodes and zones relate to one another.
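The column arithmetic can be automated. The sketch below assumes the usual “/proc/buddyinfo” layout, in which each line starts with “Node N, zone NAME” followed by the per-order counts. The function name buddy_free_kib is hypothetical.

```shell
#!/bin/sh
# buddy_free_kib PAGESIZE
# Read buddyinfo-style lines on stdin and print the free memory per zone.
# Column n (counting from 0) holds the number of free chunks of 2^n pages.
buddy_free_kib() {
    awk -v pagesize="$1" '
        $1 == "Node" && $3 == "zone" {
            pages = 0
            chunk = 1                      # pages per chunk at order 0
            for (i = 5; i <= NF; i++) {    # the counts begin at field 5
                pages += $i * chunk
                chunk *= 2                 # each order doubles the chunk size
            }
            printf "node %s zone %s: %d KiB free\n", $2 + 0, $4, pages * pagesize / 1024
        }'
}

# Example: summarize the live file when it is readable on this machine.
if [ -r /proc/buddyinfo ]; then
    buddy_free_kib "$(getconf PAGESIZE)" < /proc/buddyinfo
fi
```

Using only the three DMA32 counts quoted above and a 4096-byte page, the sum is 4846 + 2 * 3946 + 4 * 2490 = 22698 pages, or 90792 KiB.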

File pages vs. anonymous pages

Page table entries give the memory mapping mechanism the means of recording how specific memory pages are used. Memory mappings come in the following types:

File backed: With this type of mapping, the data in memory originates from a file. The mapping is not restricted to specific file types. Any file type is usable as long as the mapping function can read data from it. The benefit is that if the system frees this memory, the data can easily be obtained again by re-reading the file, as long as the file remains readable.

If the data is changed in memory, the changes have to be written back to the file on the hard drive before the memory is freed. Otherwise, the changes made in memory are lost and the file never records them.

Anonymous: This type of memory mapping has no file or device behind it. Programs request such pages on the fly when they need somewhere to hold data, and anonymous memory is also what backs their stacks and heaps.

Since this data is not associated with any file, it needs some other storage location before its memory can be reclaimed. This is the role of the swap partition or swap file: the data is first written to swap, and only then are the anonymous pages that held it freed.

Device backed: Block device files are used to address system devices. The system regards the device files as normal system files. Here, both reading and writing data are possible. The device storage data facilitates and initiates device-backed memory mapping.

Shared: A single RAM page can be mapped by multiple page table entries. Any of these mappings can be used to access the memory, and whichever mapping is used, the data seen is the same. Because the memory is shared, processes can exchange data through it, which makes shared writable mappings a highly performant way of carrying out inter-process communication.

Copy on write: This is a lazy allocation technique. If a resource is requested and it already exists in memory, the request is satisfied by mapping the existing resource. That resource might also be shared by multiple other processes.

In such cases, a process might try to write to the resource. For the write to succeed without affecting the other processes, a copy of the resource is created in memory, and the changes are made to that copy. In short, it is the first write that triggers the memory allocation.

Of the five memory mapping types discussed, swappiness deals with the first two: file-backed pages and anonymous pages.
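To see how much of your memory falls into each of these two categories, you can total the relevant lines of “/proc/meminfo”. This is a rough sketch: it relies on the “Active(anon)”, “Inactive(anon)”, “Active(file)” and “Inactive(file)” fields being present, and the function name anon_vs_file is invented for the example.

```shell
#!/bin/sh
# anon_vs_file: read meminfo-style text on stdin and print the KiB held
# on the anonymous and file-backed page lists (active plus inactive).
anon_vs_file() {
    awk '
        $1 == "Active(anon):" || $1 == "Inactive(anon):" { anon += $2 }
        $1 == "Active(file):" || $1 == "Inactive(file):" { file += $2 }
        END { printf "anon_kib=%d file_kib=%d\n", anon, file }'
}

# Example: report the split for the running system when the file is readable.
if [ -r /proc/meminfo ]; then
    anon_vs_file < /proc/meminfo
fi
```

Swappiness influences how the kernel chooses between these two pools when it needs to reclaim memory.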

Understanding Swappiness

Based on what we have covered and discussed so far, the definition of swappiness can now be easily understood.

In simple terms, swappiness is a kernel control that sets how aggressively the kernel swaps memory pages. Higher swappiness values make the kernel more aggressive about swapping, while lower values reduce the amount of swapping.

When the value is 0, the kernel avoids swapping for as long as it can: it reclaims free and file-backed pages first and only turns to swap once those options run out. Swappiness is therefore the dial that turns swap activity up and down. Notably, a swappiness value of zero does not prevent swapping from taking place. It only delays swapping until the kernel has no other way of freeing memory.

The Linux kernel source code, available on GitHub, shows how swappiness is implemented and which values are associated with it. Its default value is set by the following variable declaration and initialization.

int vm_swappiness = 60;

Swappiness values range between 0 and 100. Kernels from version 5.8 onwards also accept values up to 200.

The ideal swappiness value

Several factors determine the ideal swappiness value for a Linux system. They include your computer’s hard drive type, hardware, workload, and whether it is designed to function as a server or desktop computer.

You also need to note that swap is not primarily an emergency mechanism for freeing RAM when memory is running out. Having swap available is part of a healthy, well-functioning system. Without it, your Linux system is forced into much harsher memory management routines.

A new swappiness value takes effect immediately, with no need for a system reboot. This gives you the opportunity to adjust the value and monitor its effects. Make these adjustments and observations over a period of days and weeks until you land on a number that does not harm the performance and health of your Linux OS.
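One way to monitor the effect of a swappiness change is to record the kernel's swap counters at intervals and compare them. The sketch below assumes that “/proc/vmstat” exposes the “pswpin” and “pswpout” counters (pages swapped in and out since boot). The function name swap_activity is hypothetical.

```shell
#!/bin/sh
# swap_activity: read vmstat-style "name value" lines on stdin and print
# the cumulative number of pages swapped in and out.
swap_activity() {
    awk '
        $1 == "pswpin"  { in_pages = $2 }
        $1 == "pswpout" { out_pages = $2 }
        END { printf "pswpin=%d pswpout=%d\n", in_pages, out_pages }'
}

# Example: print one timestamped sample. Run it periodically (for instance
# from cron) and compare samples to see how fast the counters grow.
if [ -r /proc/vmstat ] && [ -r /proc/sys/vm/swappiness ]; then
    echo "$(date '+%Y-%m-%d %H:%M:%S') swappiness=$(cat /proc/sys/vm/swappiness) $(swap_activity < /proc/vmstat)"
fi
```

Counters that climb quickly between samples suggest heavy swap churn under the current setting.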

While adjusting your swappiness value, consider the following pointers:

- First, implementing 0 as the set swappiness value does not disable swap functionality. Instead, the system hard drive activity changes from swap-associated to file-associated.

- If your computer's hard drives are old or aging, reducing the swappiness value is recommended. It minimizes swap partition churn and reduces anonymous page reclamation. File system churn increases as swap churn decreases. Because raising one lowers the other, your Linux system will likely be healthier and more performant with one effective memory management method than with two methods that each deliver average performance.

- Database servers and other single-purpose servers should come with guidelines from their software suppliers. Such software has its own reliable memory management and purpose-designed file cache mechanisms, and its providers usually recommend a Linux swappiness value based on the machine's workload and specifications.

- If you are an average Linux desktop user, it is advisable to stick to the already set swappiness value, especially if you are using reasonably recent hardware.

Working with customized swappiness value on your Linux machine

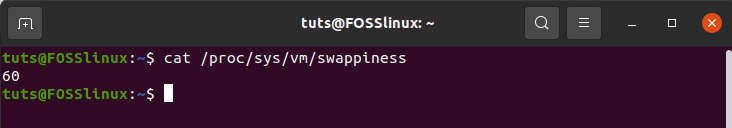

You can change your Linux swappiness value to a custom figure of your choice. First, you have to know the currently set value. It will give you an idea of how much you wish to decrease or increase your system-set swappiness value. You can check the currently set value on your Linux machine with the following command.

$ cat /proc/sys/vm/swappiness

You should get a value like 60 as it is the system’s set default.

Retrieving the default swappiness value on your Linux system

The “sysctl” command is useful when you need to change this swappiness value to a new figure. For example, we can change it to 50 with the following command.

$ sudo sysctl vm.swappiness=50

Your Linux system will pick up this newly set value right away, without any reboot. The change is temporary, though: restarting your machine resets the value to the default of 60. This is useful because it lets you experiment with swappiness values before deciding on one to use permanently.
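While experimenting, it helps to reject bad input before it reaches sysctl. The following is a minimal sketch, not an official tool. The function names is_valid_swappiness and apply_swappiness are made up, and the upper limit defaults to 100, which you can raise if your kernel accepts larger values.

```shell
#!/bin/sh
# is_valid_swappiness VALUE [MAX]
# Succeed only if VALUE is a whole number between 0 and MAX (default 100).
is_valid_swappiness() {
    value=$1
    max=${2:-100}
    case "$value" in
        ''|*[!0-9]*) return 1 ;;   # empty, negative, or not an integer
    esac
    [ "$value" -le "$max" ]
}

# apply_swappiness VALUE
# Validate first, then set the value for the running system only.
apply_swappiness() {
    if is_valid_swappiness "$1"; then
        sudo sysctl -w "vm.swappiness=$1"
    else
        echo "refusing to set swappiness to '$1'" >&2
        return 1
    fi
}
```

Calling apply_swappiness 50 then has the same effect as the command above, while apply_swappiness abc is refused.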

If you want the swappiness value to be persistent even after a successful system reboot, you will need to include its set value in the “/etc/sysctl.conf” system configuration file. For demonstration, consider the following implementation of this discussed case via the nano editor. Of course, you can use any Linux-supported editor of your choice.

$ sudo nano /etc/sysctl.conf

When this configuration file opens on your terminal interface, scroll to its bottom and add a variable declaration line containing your swappiness value. Consider the following implementation.

vm.swappiness=50

Save this file, and you are good to go. Your next system reboot will use this new swappiness value. To load it without rebooting, run “sudo sysctl -p”.
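If you would rather script the edit than open an editor, the same change can be made so that repeated runs never leave duplicate lines behind. This is only a sketch: the function name set_swappiness_line is hypothetical, and you would need root privileges to run it against “/etc/sysctl.conf”.

```shell
#!/bin/sh
# set_swappiness_line FILE VALUE
# Drop any existing vm.swappiness assignment from FILE, then append
# the new one, so the file ends up with exactly one such line.
set_swappiness_line() {
    file=$1
    value=$2
    tmp=$(mktemp) || return 1
    if [ -f "$file" ]; then
        # Keep every line except a previous vm.swappiness setting.
        grep -v '^[[:space:]]*vm\.swappiness[[:space:]]*=' "$file" > "$tmp"
    fi
    printf 'vm.swappiness=%s\n' "$value" >> "$tmp"
    # Write back in place so the file keeps its owner and permissions.
    cat "$tmp" > "$file" && rm -f "$tmp"
}
```

It is worth trying the function on a copy of the configuration file first and checking the result before touching the real one.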

Final note

The complexity of memory management makes it an ideal job for the kernel, as it would be too much of a headache for the average Linux user. Because swappiness is tied to memory management, you might look at your memory figures and conclude that you are using too much RAM. Linux, however, puts otherwise free RAM to work for roles like disk caching. As a result, the “free” memory value looks artificially low and the “used” memory value looks artificially high.

In practice, you can disregard this ratio of free to used memory. The reason is that RAM used as disk cache can be reclaimed at any moment, so the kernel counts it as available, re-usable memory.
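You can see the gap between free and available memory for yourself. The sketch below assumes that “/proc/meminfo” provides the “MemFree”, “Cached” and “MemAvailable” fields, and the function name free_vs_available is invented for illustration.

```shell
#!/bin/sh
# free_vs_available: read meminfo-style text on stdin and print the
# truly free memory, the page cache, and what the kernel deems available.
free_vs_available() {
    awk '
        $1 == "MemFree:"      { free = $2 }
        $1 == "Cached:"       { cached = $2 }
        $1 == "MemAvailable:" { avail = $2 }
        END { printf "free_kib=%d cached_kib=%d available_kib=%d\n", free, cached, avail }'
}

# Example: report the figures for the running system when the file is readable.
if [ -r /proc/meminfo ]; then
    free_vs_available < /proc/meminfo
fi
```

On a machine that has been running for a while, the available figure is usually much larger than the free figure, because most of the cache can be handed back on demand.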
